In operations research and optimization, modeling is one piece of the puzzle. A lot more goes into the DecisionOps process of successfully implementing and observing optimization models: identifying and translating business rules, accurately modeling the problem, testing the model on real data, deploying to production, linking and managing models, reporting to stakeholders, and more.

And while modeling itself has many frameworks, model testing isn’t as standardized, leaving teams to build and maintain their own testing code and processes by hand. For projects to get off the ground, model testing needs to be accessible, explainable, and repeatable. Building trust with stakeholders starts with clearly demonstrating why something happened, and this often starts with “Well, let’s test it.”

There’s consensus surrounding the importance of testing…

“The combinatorial explosion of possible scenarios in OR makes comprehensive testing essential for maintaining solution quality and reliability.” - Feasible newsletter

But how it’s done, who performs it, and how it’s integrated into the business are often less clear…

“...how do you test your optimization models that are deployed in production? How do you test your model before and after providing a solution?” - LinkedIn

“I have developed an inventory optimization model for my warehouse, and I want to know how to validate this model, perform user acceptance testing, and deploy it to [a] production environment?” - OR Stack Exchange

In this post, we’ll walk through how strategic model testing answers questions such as:

- What happens to my KPIs if I update a constraint?

- How will my model perform under different conditions?

- Which model performs better on production data?

- Does the new model meet our business requirements?

We’ll review these questions through the lens of Nextmv’s testing framework.

Decision model testing with Nextmv

Testing can start small. It can start in notebooks. On historical data sets. On your local machine. But as the business grows, managing a large test code base, the input data, compute infrastructure, monitoring capabilities, and collaboration interfaces can be cumbersome.

Using the Nextmv platform with testing functionality built specifically for operations research allows decision modelers to focus on modeling and building value for the business. Use Nextmv to streamline your testing process:

- Manage model versions and instances

- Manage data for testing with input sets

- Create and analyze experiments in the Nextmv UI

- Perform experiments on production infrastructure

- Invite teammates to a common optimization hub

- Share interactive results with teammates by copying a link

We’ll walk through a few types of testing using a routing model example.

Scenario testing

While there are tools such as batch tests and the compare runs feature that allow you to perform ad hoc analysis on model performance, scenario testing is often a natural starting point for many practitioners. Scenario tests allow you to see how your model performs under different circumstances. You can vary inputs, configurable options, and model instances to understand the impacts that each combination has on the plans created.

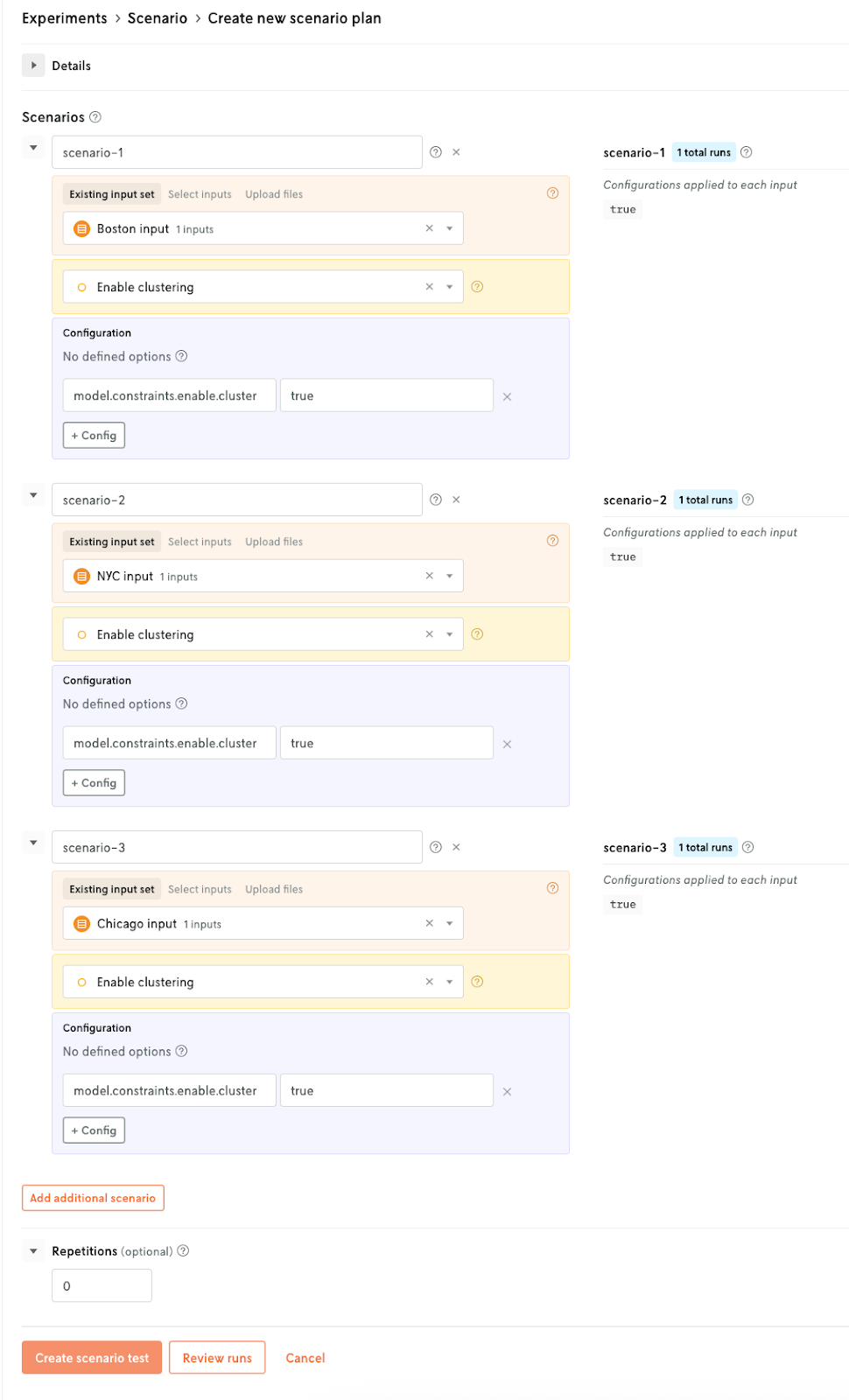

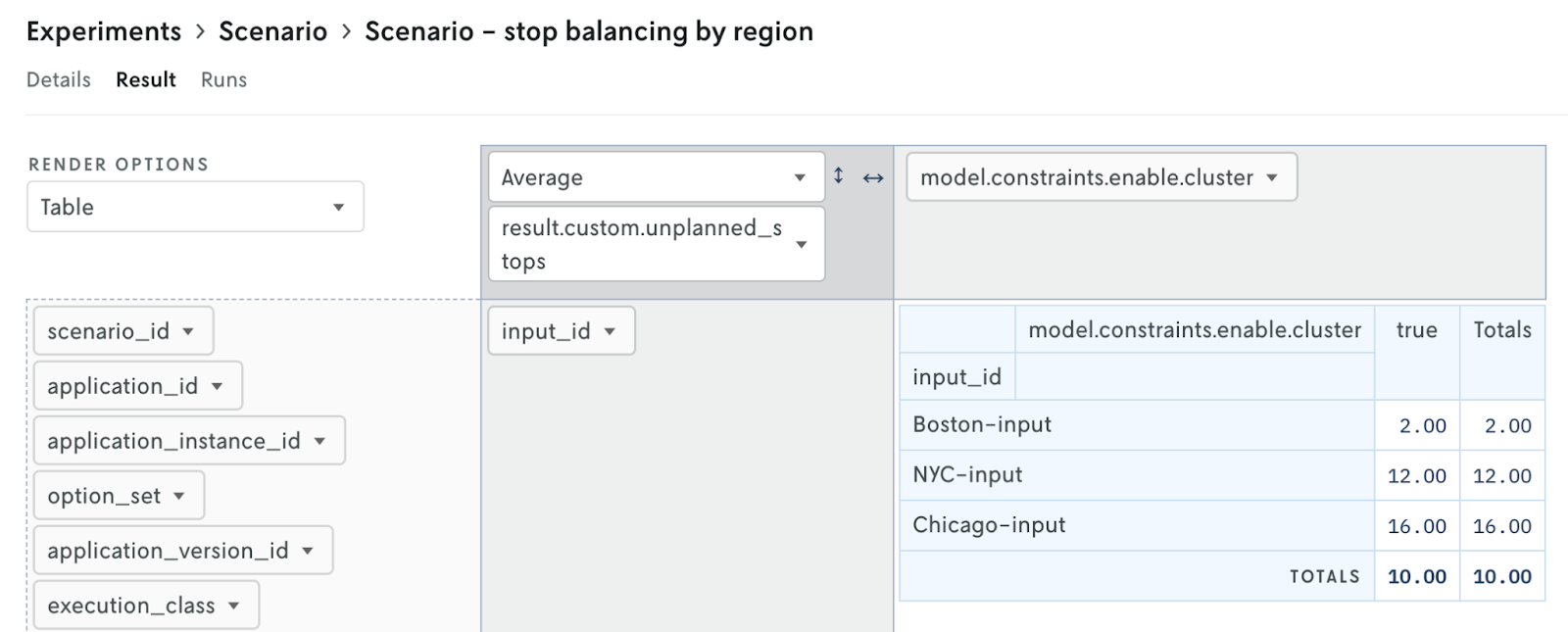

In this routing example, we’ll look at what happens to the number of unplanned stops per region when we enable clustering for our model. We set up three scenarios, one to represent each of our biggest regions (Boston, New York City, and Chicago), using the model with clustering enabled.

In the results, Boston has only 2 unplanned stops, but we see 12 and 16 for NYC and Chicago, respectively.

We’ll need to dig further into the NYC and Chicago regions to understand what model updates will be required to bring down the number of unplanned stops in those regions.
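Conceptually, a scenario test enumerates combinations of inputs, options, and model instances and runs each one. The snippet below is a minimal sketch of that enumeration in plain Python, not the Nextmv API: the `build_scenarios` helper, the input set names, the `clustering` option key, and the `candidate` instance name are all hypothetical.

```python
from itertools import product


def build_scenarios(input_sets, option_sets, instances):
    """Return one scenario per (input set, options, instance) combination."""
    scenarios = []
    for input_set, options, instance in product(input_sets, option_sets, instances):
        scenarios.append(
            {"input_set": input_set, "options": options, "instance": instance}
        )
    return scenarios


# Hypothetical names: one input set per region, a single option set with
# clustering enabled, and a single model instance.
scenarios = build_scenarios(
    input_sets=["boston", "new-york-city", "chicago"],
    option_sets=[{"clustering": True}],
    instances=["candidate"],
)

for scenario in scenarios:
    print(scenario)
```

Adding a second option set (for example, clustering disabled) would double the number of scenarios, which is why it helps to keep each dimension small when starting out.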

Acceptance testing

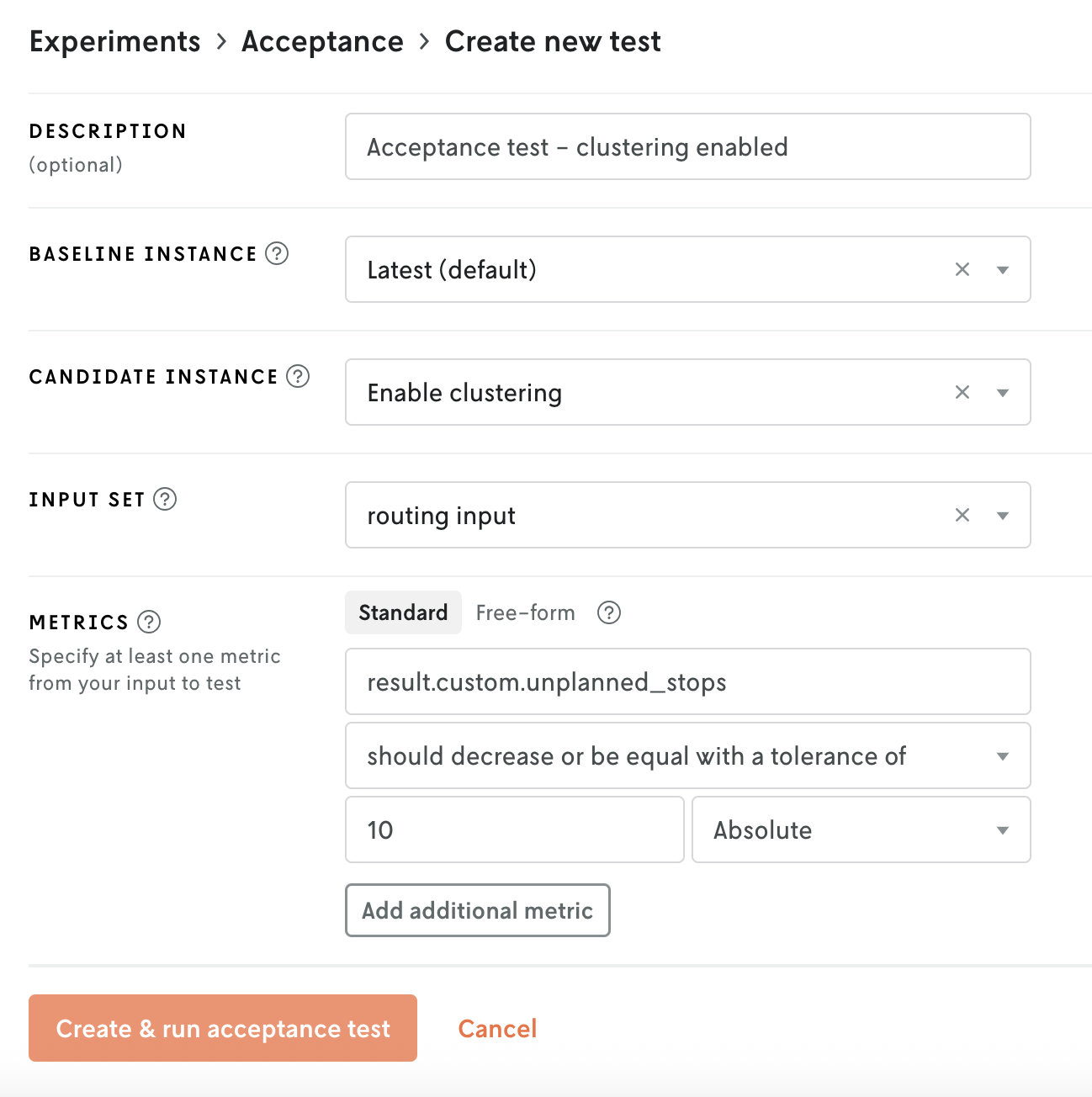

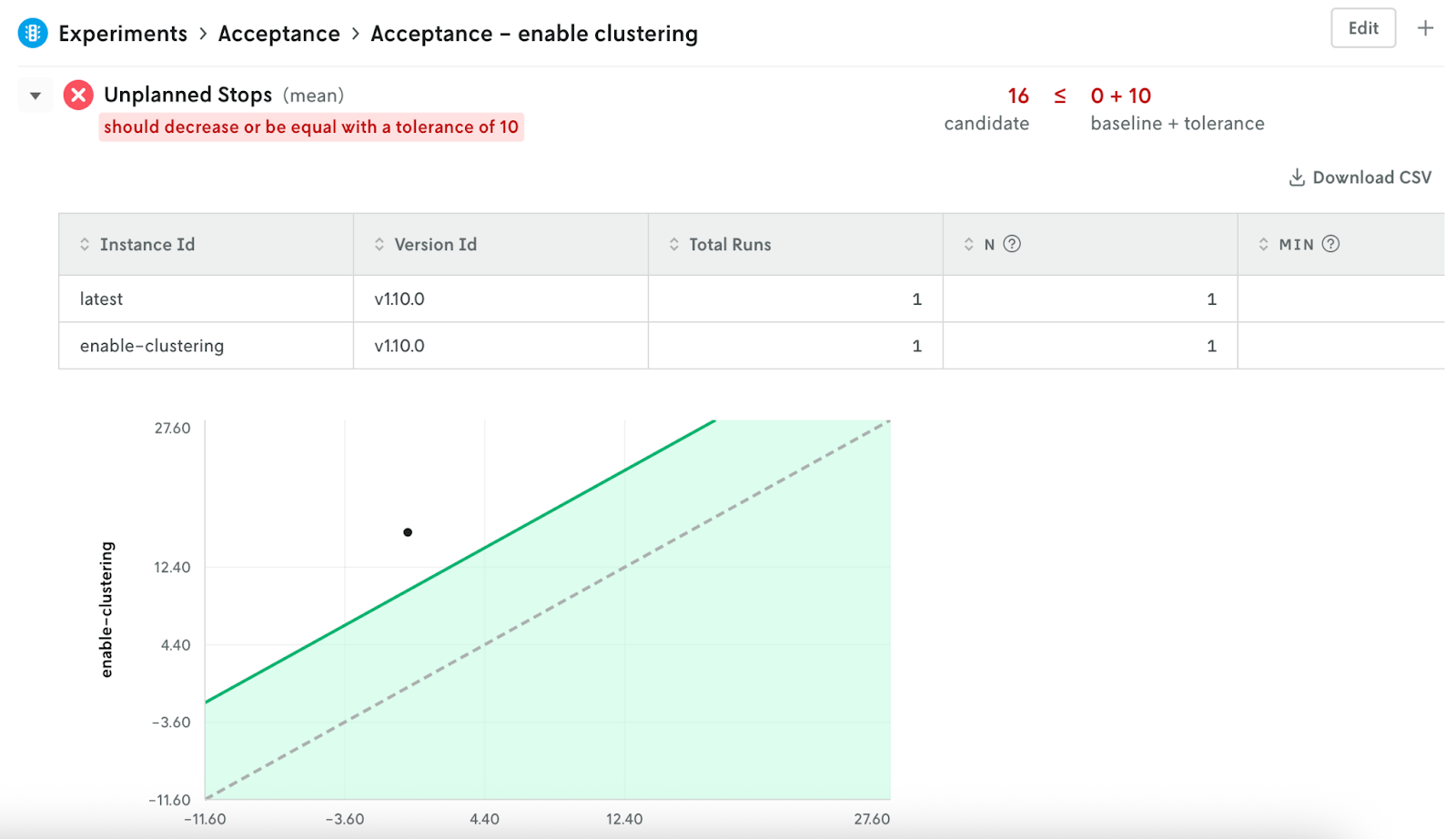

Acceptance tests allow you to verify if business or operational requirements (e.g., KPIs and OKRs) are being met. Is the new model ready for production? Is it meeting all of our thresholds? Using acceptance tests is a strategic way to incorporate CI/CD into your DecisionOps workflow.

For us to move forward with the routing model for all regions, the candidate model (with clustering enabled) must produce no more than 10 additional unplanned stops compared to the baseline model (without clustering enabled) when using a routing input set from all 20 of our regions.

In the results, we see that this acceptance test failed. The candidate model with clustering enabled resulted in 16 more unplanned stops than the baseline model when using an input set for all 20 regions.

Based on this failing test, we’ll need to identify which regions are returning a high number of unplanned stops and then take actions such as adding more vehicles, updating vehicle constraints (e.g., compatibility attributes), and/or removing clustering in those regions.
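The pass/fail rule behind an acceptance test like this one can be sketched in a few lines of Python. This is an illustrative sketch rather than how the platform evaluates tests: `check_max_increase` and `AcceptanceResult` are hypothetical names, and the absolute stop totals are made up. Only the tolerance of 10 and the observed difference of 16 come from the example above.

```python
from dataclasses import dataclass


@dataclass
class AcceptanceResult:
    metric: str
    difference: int
    passed: bool


def check_max_increase(metric, baseline_value, candidate_value, max_increase):
    """Pass when the candidate exceeds the baseline by at most max_increase."""
    difference = candidate_value - baseline_value
    return AcceptanceResult(metric, difference, difference <= max_increase)


# The totals 30 and 46 are invented for illustration; they were chosen so the
# difference matches the 16 extra unplanned stops reported in the example.
result = check_max_increase(
    "unplanned_stops", baseline_value=30, candidate_value=46, max_increase=10
)
print(result)
```

A check of this shape is easy to run automatically on every model change, which is what makes acceptance tests a natural fit for CI/CD.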

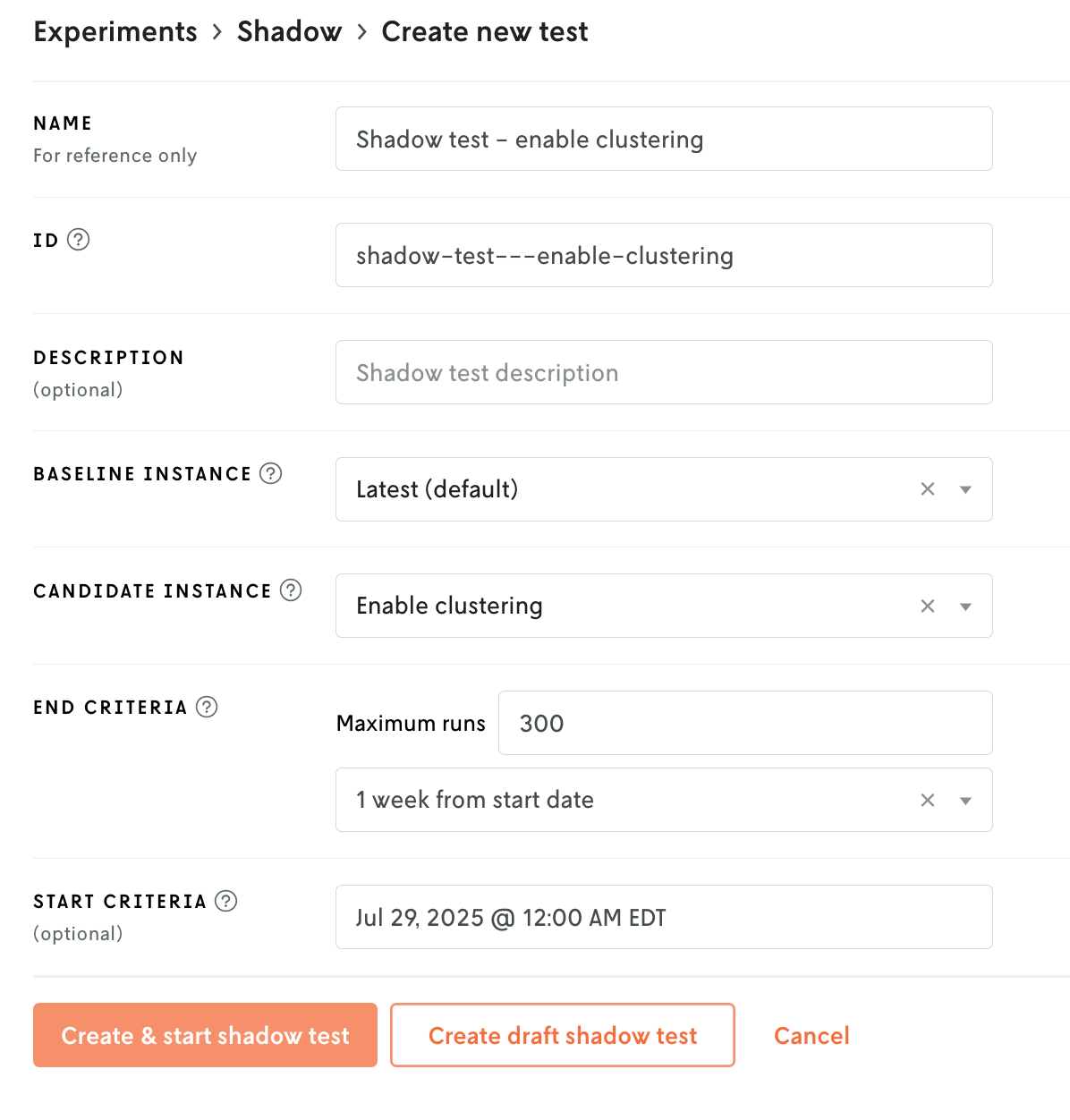

Shadow testing

Shadow tests allow you to see how a candidate model performs against the current model using production data without impacting operations. This means that during the time you specify, for every run you make with your baseline model, a shadow run will be made using the candidate model. Shadow tests can also serve as a fallback plan if something breaks with your model running in production.

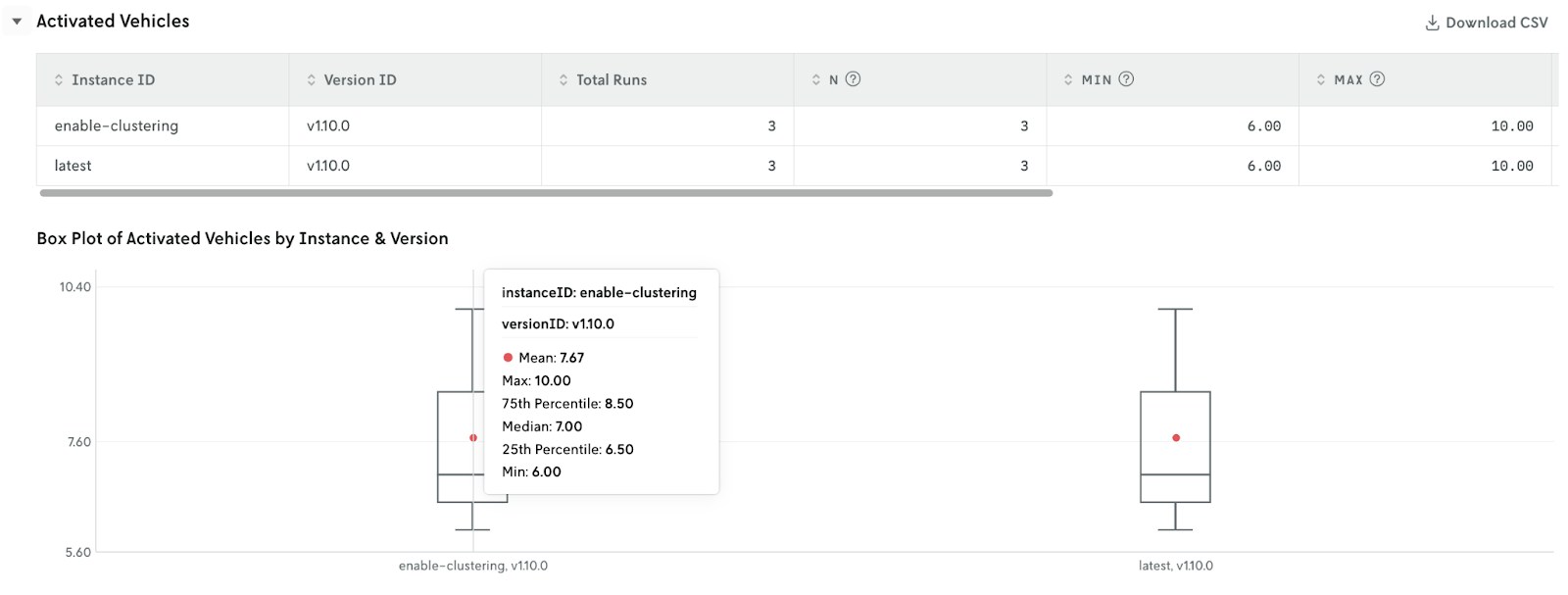

For our routing example, we’ve now successfully lowered the number of unplanned stops across all of our regions by updating compatibility attributes. We’re now interested in looking at how many vehicles are being activated with each model when using production data.

Using a small sample size of 3 runs for this example, we can see in the results that both models activated the same number of vehicles on production data (with a minimum of 6 and a maximum of 10).

These results indicate that we can move forward with the candidate model without impacting the number of activated vehicles.
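The mechanics of a shadow test (every baseline run triggers a candidate run on the same input, and only the baseline plan is used) can be sketched as follows. This is an assumption-laden illustration, not the Nextmv implementation: `run_with_shadow`, the toy solvers, and the input stop counts are all hypothetical.

```python
def run_with_shadow(inputs, baseline, candidate, metric):
    """Run baseline on every input; run candidate in the shadow and compare."""
    decisions = []
    comparisons = []
    for run_input in inputs:
        baseline_plan = baseline(run_input)
        decisions.append(baseline_plan)  # only the baseline plan is acted on
        try:
            shadow_metric = metric(candidate(run_input))
        except Exception:
            # A failing shadow run must never affect production decisions.
            shadow_metric = None
        comparisons.append((metric(baseline_plan), shadow_metric))
    return decisions, comparisons


# Toy stand-ins for real models: one vehicle per 10 stops, rounded up.
def baseline_model(run_input):
    return {"vehicles": -(-run_input["stops"] // 10)}


def candidate_model(run_input):
    return {"vehicles": -(-run_input["stops"] // 10)}


production_inputs = [{"stops": 60}, {"stops": 80}, {"stops": 100}]  # made-up data
decisions, comparisons = run_with_shadow(
    production_inputs, baseline_model, candidate_model, lambda plan: plan["vehicles"]
)
for baseline_vehicles, shadow_vehicles in comparisons:
    print(f"baseline={baseline_vehicles} shadow={shadow_vehicles}")
```

Wrapping the candidate call in a `try` block reflects the key property of shadow testing: the candidate is observed, but it cannot change or interrupt what operations receive.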

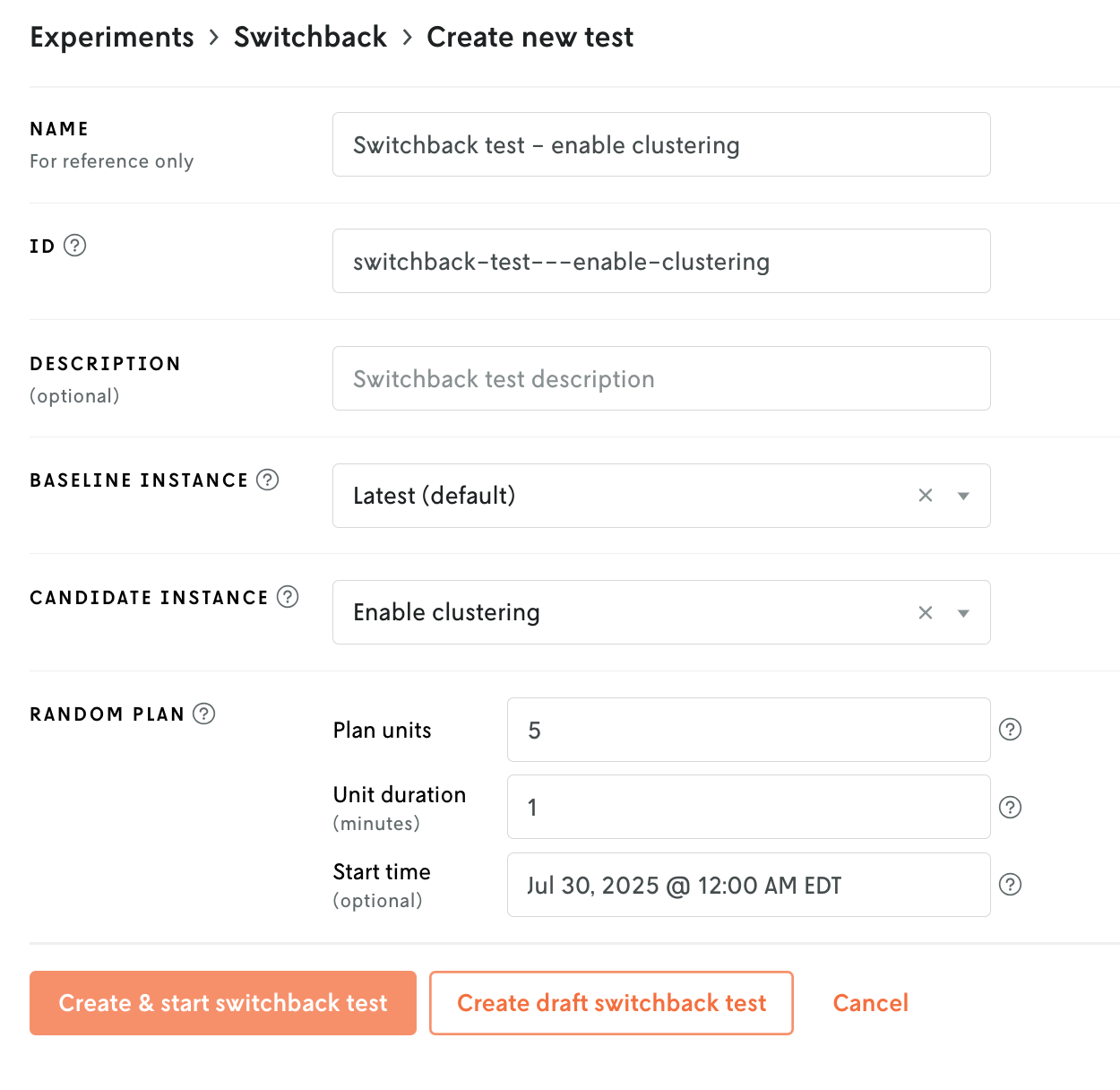

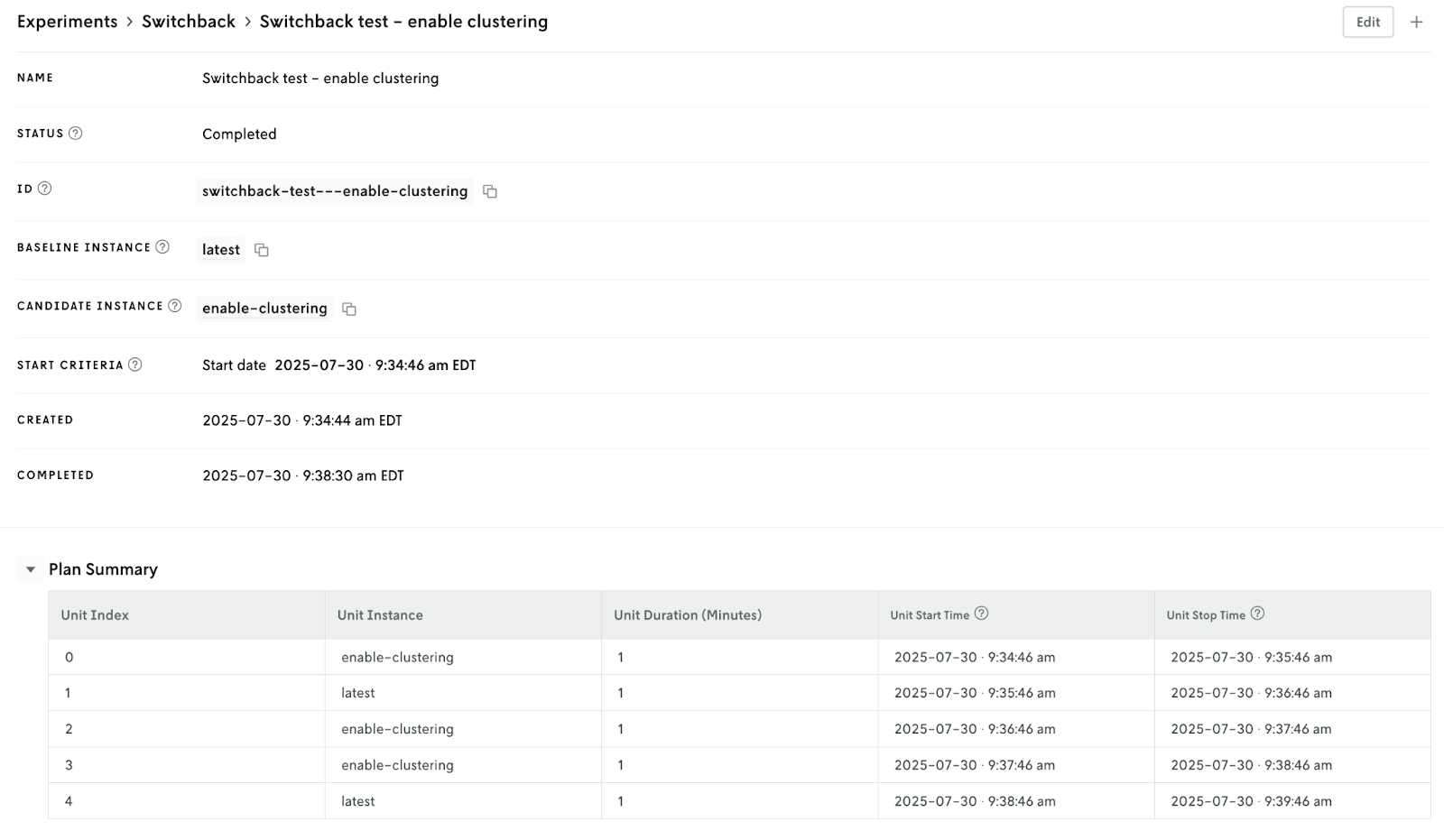

Switchback testing

Switchback tests allow you to switch between running the baseline and candidate model while making actual decisions in production. Similar to A/B tests, switchback tests create a randomized plan, but also account for network effects.

Wrapping up our routing narrative, we want to see how the candidate model performs on production data by making real-world decisions.

After creating the test, the plan will be presented, identifying when each model will be used.

We can then dive into the results per KPI to determine whether we’d like to push the candidate model to production.
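To make the idea of a randomized plan concrete, here is a minimal sketch of how one could be generated. It assumes the common switchback design in which a single model makes all decisions during each time unit, so the two models never interact within a unit. The `build_switchback_plan` function, the number of units, and the seed are hypothetical and do not describe how Nextmv builds its plans.

```python
import random


def build_switchback_plan(num_units, seed=None):
    """Randomly assign either the baseline or candidate model to each time unit."""
    rng = random.Random(seed)  # a seeded generator makes the plan reproducible
    return [rng.choice(["baseline", "candidate"]) for _ in range(num_units)]


# Hypothetical setup: 8 consecutive time units (for example, 8 one-hour windows).
plan = build_switchback_plan(num_units=8, seed=42)
for unit, model in enumerate(plan):
    print(f"time unit {unit}: {model}")
```

Randomizing over time windows rather than individual requests is what separates this design from a standard A/B test: within any window, all decisions come from one model, so its plans are not competing with the other model's for the same vehicles.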

Get started

Create a free account to get started with DecisionOps and testing on the Nextmv platform.

Have questions? Reach out to us anytime.